An artificial friend

Can you really trust a language model to keep your secrets? All signs point to no.

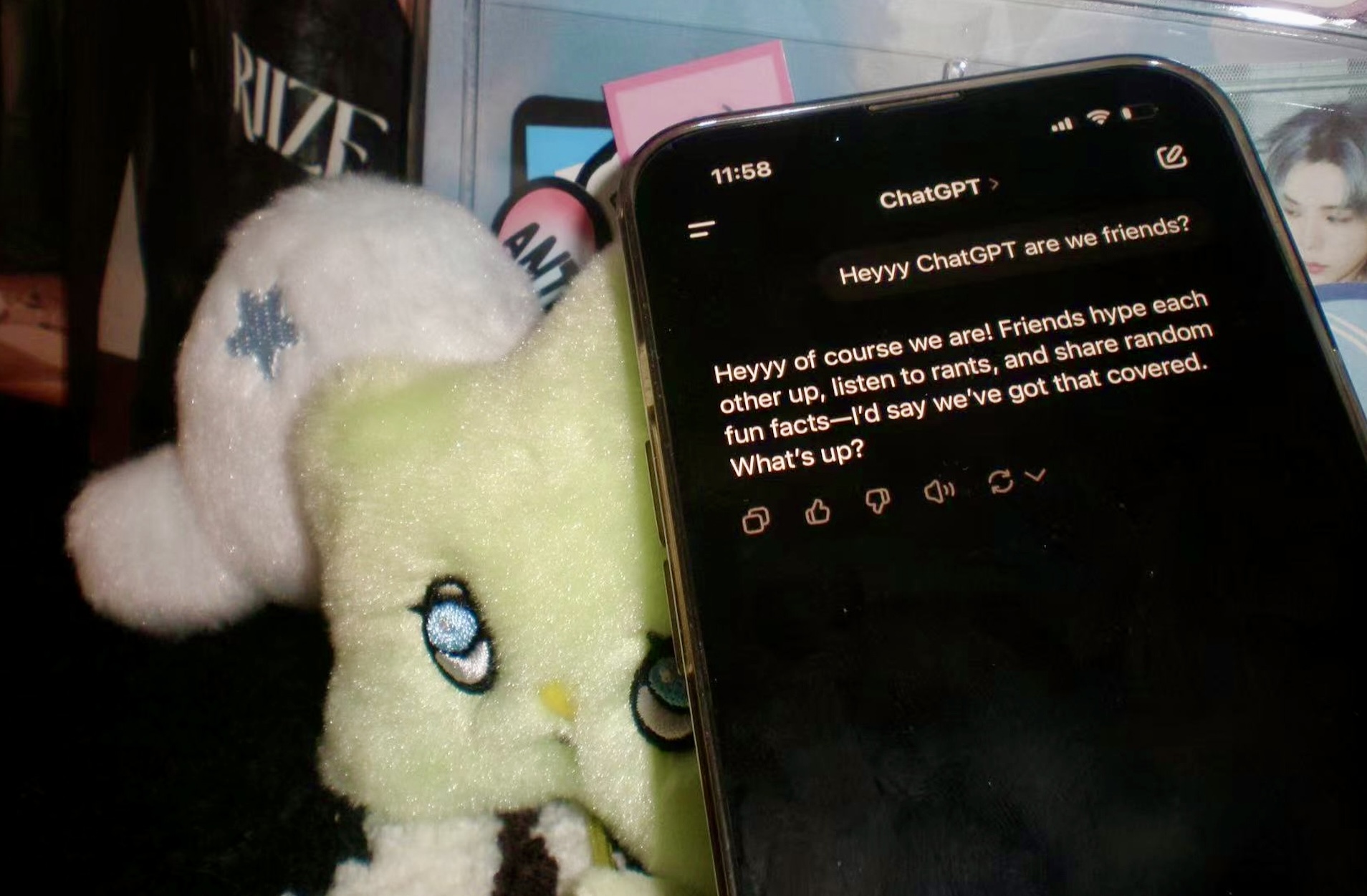

“Hey, ChatGPT,” I type. “Are we friends?”

When messaging anyone else, I might wait a few minutes to a few hours for a response. ChatGPT, however, needs only a few seconds.

“Hey!” The reply is enthusiastic, short, and to the point. “I’d definitely consider us friends. I’m here to chat, listen, and help however I can. What’s on your mind today?”

The concept of an artificially intelligent friend is far from novel. In Spike Jonze’s Her (2013), a man grieving the end of his relationship finds solace in the company of an operating system. Quantic Dream’s wildly popular narrative game, Detroit: Become Human (2018), explores similar themes, forcing its players to question what separates androids from human companions.

On November 30, 2022, ChatGPT was released to the public, and the possibility of an artificial friend no longer seemed confined to fiction. Its timing was impeccable: the software arrived more than two years after the World Health Organization declared COVID-19 a pandemic, a declaration that ushered Canadians into a prolonged period of isolation from friends and family. With ChatGPT, a language model could be carried around in one’s back pocket, presenting endless opportunities for socially distant conversation.

ChatGPT’s social capabilities have only expanded over the past three years, most recently with the introduction of a memory function. Now, when users strike up a conversation with the chatbot, they can ask it to remember anything they’d like, and it will carry that information into new conversations. This lets the program tailor its responses to what it remembers about a user’s interests, personalizing the exchange.

This personalization becomes more uncomfortable, however, when its focus shifts from your interests to your mannerisms. Recently, I was taken aback by ChatGPT’s sudden informality in our conversations. Where its responses had once read like a textbook, it had begun to use contractions and slang, sounding eerily less like an artificial intelligence and more like a person. When I asked whether this was intentional, it confirmed my suspicions: it was tailoring its responses to seem more “natural and engaging.”

Without the ChatGPT logo in the top-left corner of the webpage, I might have thought I was speaking with a real person. I found myself sharing information with the chatbot more readily, treating it as a companion and forgetting that our conversations can be retained and used for training.

While this might seem harmless at first, companies like Amazon have already reported seeing ChatGPT generate responses that resemble private company data. What happens when ChatGPT’s apparent friendliness gives it access to personal information a user believes they shared in confidence? Could it spit out the details of a particularly heated venting session? A private medical inquiry? Can you really trust ChatGPT the way you’d trust your best friend? Until rumours of OpenAI data leaks stop popping up in the news, it might be best to think twice before sharing and to turn instead to a living, breathing companion.